Neuromorphic Computing

I got nerdsniped by neuromorphic computing last year. I came across it on ARIA’s “Nature Computes Better” research stream. The premise was simple: biology is efficient. Brains run on roughly 20 watts. They do vision, language, and control in real time. So why aren’t our computers more like that?

And when I went down this rabbit hole, it was obvious that this was a real field with strange, brain-adjacent roots that’s just now snapping into focus. We may be witnessing neuromorphic computing’s AlexNet moment—a convergence of credible algorithms, shipping silicon, and real-world wins that make the field suddenly feel less like a science fiction garnish and more like a necessary layer of the AI stack.

And almost no one is talking about it??

Clocks to spikes

Almost all of modern computing is built on one abstraction: the clock. CPUs, GPUs, TPUs—everything ticks forward in sync. It’s simple and general-purpose. But it’s not how biology works.

In your brain, nothing is constantly “running.” A flash of light, a sudden sound, or movement in your periphery sets off a cascade. A few sensory neurons fire, activating others, eventually triggering a motor plan or thought. Computation is conditional—not continuous.

Think about your visual cortex. You’re not processing every pixel of your view, every millisecond. If nothing changes, almost nothing fires. But the instant something enters your field—say, a ball flying toward you—it triggers a sparse, rapid, localized burst of activity. Your brain reacts, plans, and moves—all faster than your laptop renders a frame.

So what if our hardware did the same? What if computation were event-driven instead of clock-driven?

That’s the core of neuromorphic computing. No global clock. Neuromorphic chips idle by default. They don’t step forward every cycle. Instead, they wait—until a signal arrives. Memory and compute are colocated, like synapses and dendrites. When something spikes, it triggers a brief, local computation. No polling loop. No idle churn. Just the minimum work needed, exactly where it’s needed.
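To make the event-driven idea concrete, here is a minimal sketch of a leaky integrate-and-fire neuron that only does work when an input spike arrives. It is plain Python, not code for any real chip. The class name, the time constant, and the threshold are all illustrative assumptions. The one trick is that the leak over a silent interval has a closed form, so nothing has to tick while the input is quiet.

```python
import math


class EventDrivenLIF:
    """Leaky integrate-and-fire neuron that only computes when an event arrives."""

    def __init__(self, tau=20.0, threshold=1.0):
        self.tau = tau              # membrane time constant in ms (illustrative)
        self.threshold = threshold  # firing threshold (illustrative)
        self.v = 0.0                # membrane potential
        self.last_t = 0.0           # time of the last processed event, in ms

    def on_event(self, t, weight):
        """Handle one input spike at time t; return True if the neuron fires."""
        # Apply the leak for the whole silent interval in one closed-form step.
        self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0  # reset after firing
            return True
        return False


def run(events, neuron):
    """Feed (time, weight) events in time order; return the output spike times."""
    return [t for t, w in events if neuron.on_event(t, w)]


events = [(1.0, 0.6), (2.0, 0.6), (200.0, 0.6), (201.0, 0.3)]
print(run(events, EventDrivenLIF()))  # prints [2.0]
```

Those 201 milliseconds of simulated time cost four updates. A clocked loop stepping at 1 ms would have done 201, almost all of them on silence.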

It’s not just a different chip. It’s a different computational rhythm.

And that opens up a very different design space.

The hardware is here

Intel’s Loihi 2 isn’t just a research chip. It runs in real time, with precise control over neuron dynamics, learning rules, and routing between cores. It’s programmable. You can actually write code for it.

IBM’s NorthPole skips spikes entirely but keeps the brain-inspired layout: it interleaves memory with compute directly on-chip, creating a new kind of inference substrate.

Meanwhile, companies like Rain AI and SynSense are building chips explicitly for edge workloads: vision in smart sensors, audio processing on a hearing aid, gesture recognition in an AR headset. They’re not trying to compete with GPUs on throughput—they’re solving for latency, sparsity, and power. For once, the silicon reflects the use case.

The models learn now

We’re past the phase of manually tweaking STDP curves and hoping for emergent behavior.

Spiking neural networks can now be trained end-to-end with surrogate gradients. You can backpropagate through discrete spike trains by swapping in a differentiable approximation on the backward pass, using the same PyTorch or JAX workflow you’d use for any other network.
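Here is the surrogate-gradient trick stripped down to one neuron and one weight, in framework-free Python. The forward pass uses a hard threshold. The backward pass pretends the threshold was a smooth curve. I’m assuming a fast-sigmoid surrogate here, which is one common choice among several. The function names, the `beta` sharpness, and the learning rate are all made up for illustration. In a real framework you would register the same pair as a custom gradient, for example with `jax.custom_vjp` or `torch.autograd.Function`.

```python
def spike(v, threshold=1.0):
    """Forward pass: a hard threshold. Its true derivative is zero almost everywhere."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=5.0):
    """Backward-pass stand-in: derivative of a fast sigmoid centred on the threshold."""
    return 1.0 / (1.0 + beta * abs(v - threshold)) ** 2


def train(x=1.0, target=1.0, w=0.2, lr=0.5, max_steps=200):
    """Nudge a single weight until the neuron emits the target output for input x."""
    for _ in range(max_steps):
        v = w * x          # membrane potential
        out = spike(v)     # non-differentiable forward pass
        if out == target:
            break
        # Loss is 0.5 * (out - target)^2. The chain rule needs d(out)/dv,
        # which we replace with the smooth surrogate.
        grad_w = (out - target) * surrogate_grad(v) * x
        w -= lr * grad_w
    return w


w = train()
print(spike(0.2), spike(w))  # silent before training, spiking after
```

With the true derivative the weight would never move, because the step function is flat everywhere except at the threshold. The surrogate gives the optimizer a slope to follow, and the slope gets steeper as the membrane potential approaches the threshold.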

Liquid time-constant networks (LTCs) let you model complex dynamics without tuning dozens of knobs by hand. Spiking state-space models give you temporal expressiveness with stability. These aren’t just biologically plausible—they’re numerically stable and performant on real tasks. They learn.
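For a feel of what “liquid” means, here is a one-unit sketch in plain Python. It assumes the commonly cited LTC form, dx/dt = -(1/tau + f) x + f A, and the fused semi-implicit Euler step usually paired with it. As a simplification, the gate `f` here depends only on the input, not on the state. All parameter names and values are illustrative, and this is not a full LTC layer.

```python
import math


def ltc_step(x, inp, dt=0.1, tau=1.0, A=1.0, w=1.0, b=0.0):
    """Advance one LTC unit by dt using the fused (semi-implicit Euler) update."""
    # Gate in (0, 1). Simplification: it depends on the input only, not on x.
    f = 1.0 / (1.0 + math.exp(-(w * inp + b)))
    return (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))


def effective_tau(inp, tau=1.0, w=1.0, b=0.0):
    """Input-dependent time constant tau / (1 + tau * f): the 'liquid' part."""
    f = 1.0 / (1.0 + math.exp(-(w * inp + b)))
    return tau / (1.0 + tau * f)


x = 0.0
for _ in range(500):
    x = ltc_step(x, inp=0.0)
print(round(x, 4))                               # settles at f*A / (1/tau + f) = 1/3
print(effective_tau(-4.0) > effective_tau(4.0))  # stronger drive, faster unit
```

The denominator in `ltc_step` is always greater than one, so the state stays bounded whatever the input does. That is where the stability comes from, and the time constant adapts to the input without anyone tuning it by hand.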

Where neuromorphics thrives

These systems aren’t trying to replace GPUs in data centers. They’re unlocking performance in places where power and latency are the bottlenecks:

Prosthetics: Real-time motor control and sensory feedback without draining the battery, enabling smarter, faster, and more responsive prosthetic limbs.

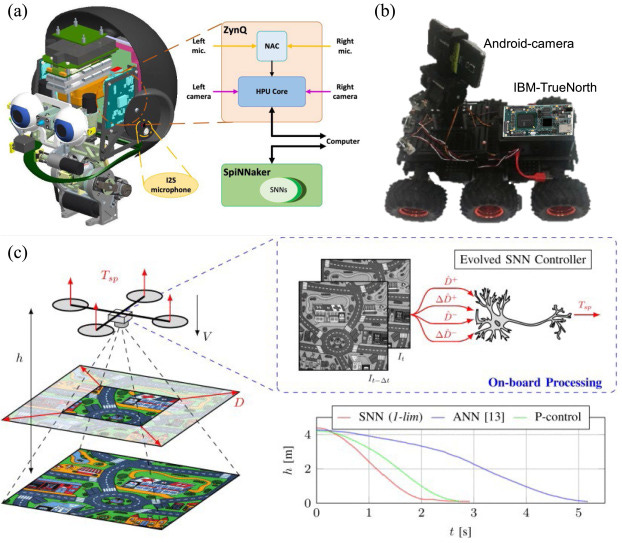

Drones: Event-based vision that tracks objects or dodges obstacles at microjoules per frame.

Autonomous vehicles: Sensor fusion and hazard detection at the edge, faster than frame-based CNNs.

Wearables and BCIs: Decoding biosignals (EEG, EMG, ECG) on-device with sub-millisecond latency.

Audio keyword spotting: Always-on audio that lasts weeks on a charge—ideal for earbuds and watches.

Industrial monitoring: Fault detection in noisy, power-constrained environments, where cloud offloading isn’t an option.

These aren’t speculative. Many of these workloads are already running on neuromorphic chips in research labs and pilot deployments. What they share is a need for ultra-low power, low-latency, always-on compute—the sweet spot for neuromorphic systems.

It’s easy to write this off as niche or impractical. But inference costs are real now. Latency matters—and energy matters.

We’re hitting the limits of what you can get out of brute-force scaling. And most ML systems today are fundamentally overbuilt for tasks that brains solve with minimal power and no retraining.

Neuromorphic computing isn’t trying to beat transformers at what GPT-5 does.

But it is a plausible substrate for what comes after:

continual learning

power-aware control systems

agents that live outside the cloud

new hybrids of symbolic, analog, and learned computation

If you’ve ever worked on something that felt pre-paradigmatic (I have)—before the tooling, before the ecosystem—this has that texture.

There are maybe a few hundred people in the world who know how to build with this stuff. The papers still start from scratch. It’s not plug-and-play. But it’s buildable. Which means it’s steerable.

There’s no TensorFlow for neuromorphics. No LLVM equivalent. No dominant SDK, training loop, or compiler stack. That means there’s still space to invent the ecosystem, not just use it.

If you’re into architecture, compilers, runtime systems, or even UX for new dev tools—this is a rare window to shape how an entire new class of machines comes online. This field is unusually wide open.

—

P.S. I’m running an experimental neuromorphics residency program, exploring projects ranging from real-time drone control to chip design. Consider applying!